The Federation of Royal Colleges of Physicians of the UK – and the trainees, patients and health services – rely on the MRCP(UK) to deliver exams that are truly world class.

Some facts and figures:

- More than 2,000 physicians are involved in developing and delivering the examinations each year, as well as the staff in MRCP(UK) Central Office, the examination teams based in Edinburgh, Glasgow and London and regional organisers.

- We deliver 15 exams, which include questions from…

- …our bank of more than 30,000 questions

- More than 20,000 candidates sit the exams each year…

- …in over 300 centres worldwide.

MRCP(UK) has a number of processes in place to ensure that its examinations are high quality, both in terms of the standards they set and the methods by which they are delivered. Physicians play a number of roles in the examination process, from writing questions to examining candidates’ clinical examination and communication skills. Many sit on the examining boards that oversee each individual examination.

It is crucial to ensure that the exams are shaped by physicians who reflect the NHS and medicine at its best. We work with them to:

- Set national standards for the profession

- Ensure that questions are up-to-date and relevant to clinical practice

- Create exams that test the level of knowledge required, are set to the right standard, and are supported by rigorous processes

- Represent the workforce in terms of gender, ethnic diversity, age, specialties and experience.

The colleges and MRCP(UK) Central Office operate standardised processes for recruiting, training and monitoring all of the physicians involved in the examinations. A succession planning process ensures that there is orderly turnover of members on the various boards and committees. All vacancies are subject to open recruitment, and appointments are made on the principle of ‘best person for the job’.

Written examinations (MRCP(UK) Parts 1 & 2, SCEs)

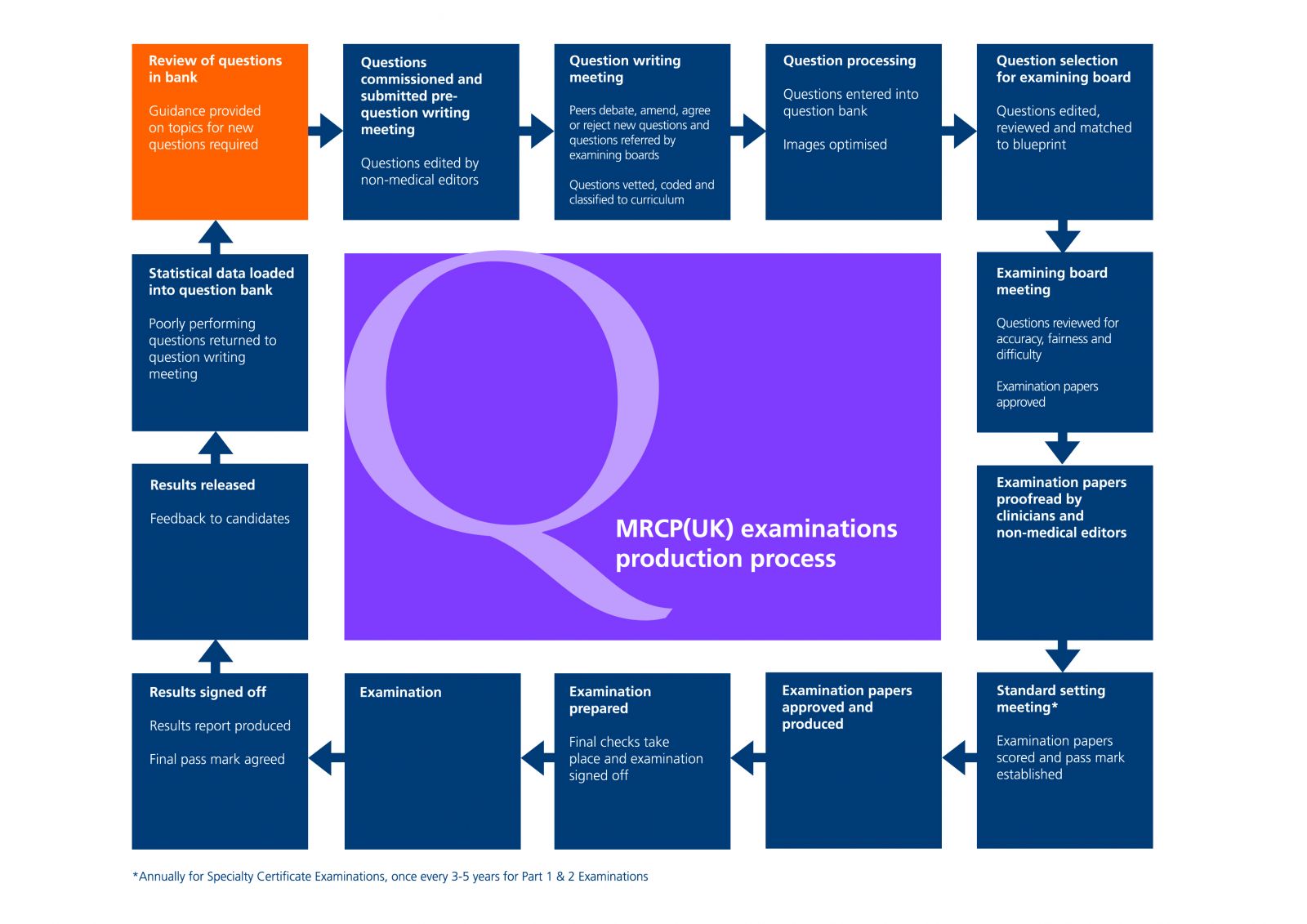

Before a candidate sees an examination paper, each question will have been through a number of stages, from initial drafting to consideration of how likely a borderline candidate will be to answer it correctly. This diagram illustrates the stages in the process.

Question writing

Question writers submit draft questions for editing before they go to the Question Writing Group.

Peer groups discuss new questions, and any questions that have been returned for review by the standard setting group or exam board.

Examination Board overview

The Examining Board considers each question prior to its appearance in the papers and subsequently reviews the question's performance.

The Board Secretary selects 200–500 questions that map to the blueprint, and these are allocated to board members for review. The questions are discussed at the board meetings. The Board also considers a detailed analysis of the responses to each question (including a separate index of discrimination) and a coefficient indicating the internal reliability of the Examination as a whole. In light of these analyses, the Boards decide whether questions are accepted, rejected, deferred or revised.
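As a rough illustration of the kind of item analysis described above, the sketch below computes an upper-lower discrimination index for one question and a KR-20 reliability coefficient for a whole paper. Both formulas are assumptions chosen for illustration: the text does not say which discrimination index or reliability coefficient MRCP(UK) uses, and the function names and response data are hypothetical.

```python
from statistics import mean, pvariance

def discrimination_index(responses, item, fraction=0.27):
    """Upper-lower index: proportion correct among the top-scoring group
    minus the proportion correct among the bottom-scoring group.
    (Assumed method; the 27% group size is a common convention.)"""
    ranked = sorted(responses, key=sum, reverse=True)
    n = max(1, round(len(ranked) * fraction))
    upper = mean(r[item] for r in ranked[:n])
    lower = mean(r[item] for r in ranked[-n:])
    return upper - lower

def kr20(responses):
    """Kuder-Richardson 20 reliability for right/wrong (1/0) items.
    (Assumed coefficient; other reliability measures exist.)"""
    k = len(responses[0])
    totals = [sum(r) for r in responses]
    var_total = pvariance(totals)
    pq = 0.0
    for i in range(k):
        p = mean(r[i] for r in responses)  # proportion answering item i correctly
        pq += p * (1 - p)
    return (k / (k - 1)) * (1 - pq / var_total)

# Hypothetical data: six candidates, four questions (1 = correct, 0 = incorrect).
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]

print(discrimination_index(responses, item=0))
print(round(kr20(responses), 3))
```

A question with a discrimination index near zero (or negative) is answered no better by strong candidates than by weak ones, which is the sort of signal that might prompt a board to revise or reject it.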

Three stages of editing and proofreading are undertaken by medics and non-medical editors before the final question paper is signed off for print.

As well as discussing the actual questions, the board discusses academic issues such as the performance of the last exam, and any appeals.

Standard setting

A ‘modified’ Angoff process is used to set the standard for the Part 1 and Part 2 written examinations and Specialty Certificate Examinations (SCEs). Standard setters are asked to score each question with the percentage of borderline trainees who will know the correct answer. These scores are collated and presented at the standard setting meeting. After discussion, the standard setters again score the questions, and the mean of these post-discussion scores is used to set the standard.
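The arithmetic of the final step can be sketched as follows. This is a minimal illustration only: the function name and the scores are hypothetical, and it assumes every standard setter scores every question, so that averaging per question and then across questions equals the overall mean.

```python
from statistics import mean

def angoff_pass_mark(post_discussion_scores):
    """Each inner list holds the standard setters' post-discussion estimates
    (percentage of borderline trainees expected to answer correctly)
    for one question. Returns the pass mark as a percentage."""
    per_question = [mean(judges) for judges in post_discussion_scores]
    return mean(per_question)

# Hypothetical scores: three questions, three standard setters each.
scores = [
    [60, 70, 65],
    [40, 50, 45],
    [80, 85, 75],
]

pass_mark = angoff_pass_mark(scores)
print(round(pass_mark, 1))  # 63.3
```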

Results and equating

Results are only released once the Chair and Medical Secretary of the relevant Examining Board are satisfied that the Examination has been conducted appropriately and in accordance with the procedures of the Royal Colleges of Physicians.

Candidates’ overall results are calculated using a process called equating for Part 1 and Part 2 written examinations. Equating is a statistical procedure used to adjust scores to account for the varying level of difficulty of test forms or diets. MRCP(UK) uses equating to ensure that candidates receive comparable results for comparable performance in different diets of the examination. This document gives an overview of equating.
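To give a feel for what equating does, the sketch below uses simple linear equating, which places a score from one diet onto the scale of a reference diet by matching means and standard deviations. This is an assumption made purely for illustration: the text does not state which equating method MRCP(UK) uses, and the function name and data are hypothetical. Linear equating also assumes the two candidate groups are of comparable ability.

```python
from statistics import mean, pstdev

def linear_equate(score, new_diet_scores, reference_diet_scores):
    """Map a score from the new diet onto the reference diet's scale
    by matching the mean and standard deviation of the two score sets."""
    mu_x, sd_x = mean(new_diet_scores), pstdev(new_diet_scores)
    mu_y, sd_y = mean(reference_diet_scores), pstdev(reference_diet_scores)
    return mu_y + (sd_y / sd_x) * (score - mu_x)

# Hypothetical data: the new diet was harder, so raw scores ran 5 marks lower.
new_diet = [50, 60, 70]
reference_diet = [55, 65, 75]

equated = linear_equate(60, new_diet, reference_diet)
print(equated)
```

Here a raw score of 60 on the harder diet is treated as equivalent to 65 on the reference diet, so comparable performance receives a comparable result.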

For SCEs the pass mark is determined after the examination using the Hofstee compromise method. This takes account of candidate performance in the examination alongside judgements made by standard setters.
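A minimal sketch of the Hofstee idea follows. Standard setters supply the lowest and highest acceptable pass marks and the lowest and highest acceptable fail rates; the pass mark is taken where the line joining those limits meets the curve of actual fail rates. The parameter names, the grid search, and the data are illustrative assumptions, not a description of how MRCP(UK) implements the method.

```python
def hofstee_cut(scores, c_min, c_max, f_min, f_max, step=0.1):
    """Scan candidate pass marks from c_min to c_max and return the first
    one at which the observed fail rate reaches the judges' line running
    from (c_min, f_max) down to (c_max, f_min)."""
    n = len(scores)
    steps = int(round((c_max - c_min) / step))
    for i in range(steps + 1):
        cut = c_min + i * step
        fail_rate = sum(s < cut for s in scores) / n
        line = f_max - (f_max - f_min) * (cut - c_min) / (c_max - c_min)
        if fail_rate >= line:
            return cut
    # No crossing inside the acceptable range: fall back to the upper limit.
    return c_max

# Hypothetical data: 100 candidates scoring 0..99 (percent).
scores = list(range(100))

# Hypothetical judgements: pass mark between 50% and 70%,
# fail rate between 30% and 70%.
cut = hofstee_cut(scores, c_min=50, c_max=70, f_min=0.30, f_max=0.70)
print(round(cut, 1))
```

The observed fail rate rises as the pass mark rises, while the judges' line falls, so the two can cross at most once within the acceptable range.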

Clinical examination (PACES)

The Clinical Examining Board (CEB) oversees all elements of the MRCP(UK) Part 2 Clinical Examination (PACES) and, along with the PACES Standard Review Group, has a key role in managing the quality of the clinical examination by ensuring that the academic objectives of assessment for the examination are met.

CEB reviews the results and considers recommendations from the annual meeting of the PACES Standards Review Group, reviews reports on the delivery of the examination in the UK and internationally, and develops policies affecting the delivery of the examination, for example examiner criteria and feedback to candidates.

PACES is delivered through an international network of over 120 host centres, and the processes for setting up and running these centres are vital to maintaining the quality of the examination.

A Chair of Examiners, who is external to the host centre, is appointed for each examination day, and is responsible for ensuring that the exam is conducted according to regulations. There are two examiners in each station. Examiners mark each candidate independently and assess each separate skill using the three-point scale of satisfactory, borderline and unsatisfactory.

Although very different to the written examinations, the clinical examinations share similar processes in terms of writing and reviewing standardised scenarios (those used at Stations 2 and 4 of the examination). The Scenario Editorial Committee (SEC), which includes a communications adviser, an ethicist and trainee and lay representatives, reports to the CEB. The SEC is responsible for ensuring a sufficient supply of high quality, appropriate scenarios for use at the two ‘talk’ stations within PACES, which it achieves by:

- Overseeing the work of the scenario writing group

- Reviewing performance of scenarios, editing and approving scenarios for use in each diet of the examination.

At other stations, real patients appear in the examination, and the process for ensuring quality rests with the examiners on the day who agree how the standards apply to the specific patient presenting to the candidate on that specific day. This process is known as calibration. In addition, host examiners produce scenarios based on real patients for Station 5, which are vetted to ensure quality before use by the organising college. A Chair of Examiners is appointed by the College for each centre.

To keep this operation running like a ‘well-oiled machine’, we have a number of checks in place to ensure that we are maintaining the quality of the examinations. These include candidate surveys and the monitoring of appeals and complaints.